How about trying a bookmarklet?

Quicker has a related action (View All Images on a Web Page - by Qthzrvy - Action Info - Quicker).

Alternatively, create a new bookmark and paste one of the snippets below as its URL.

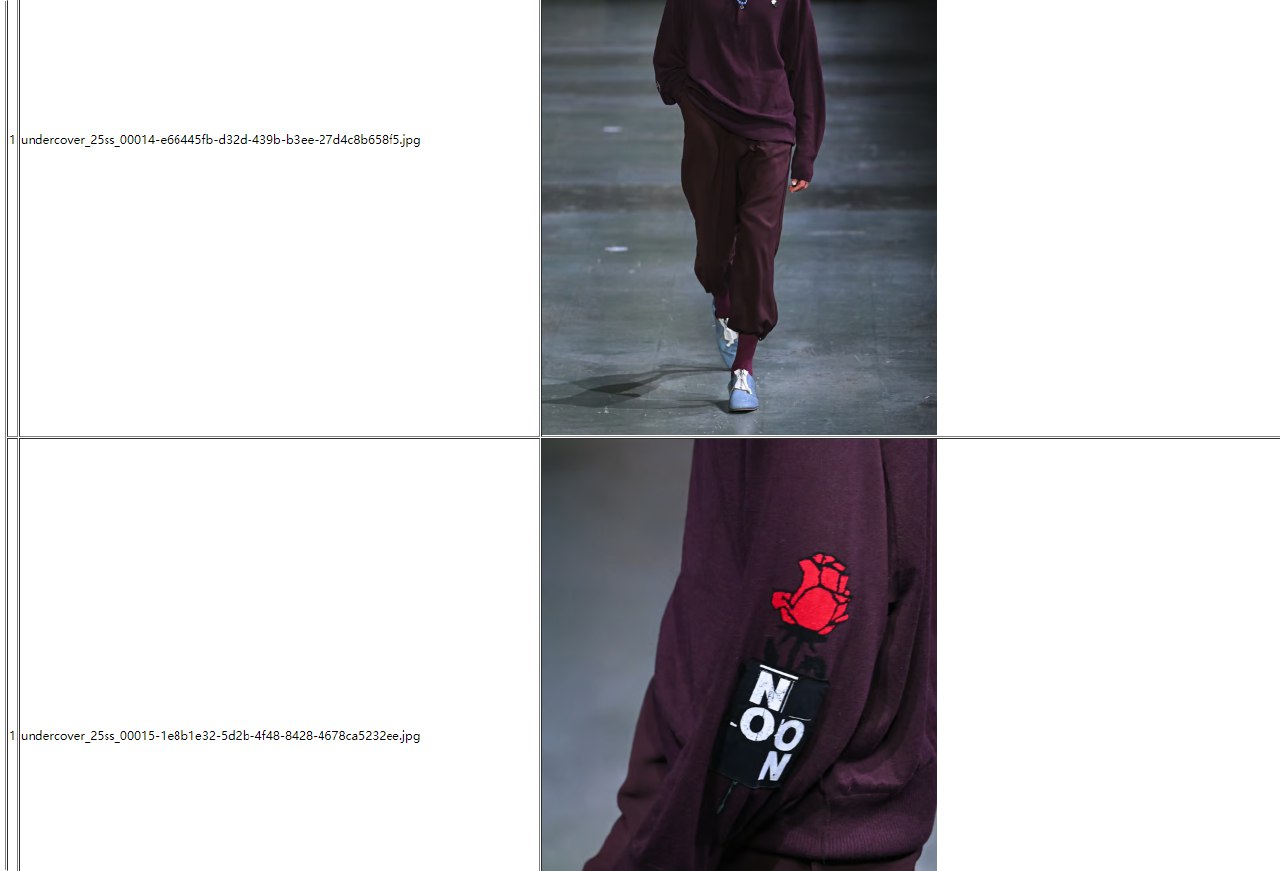

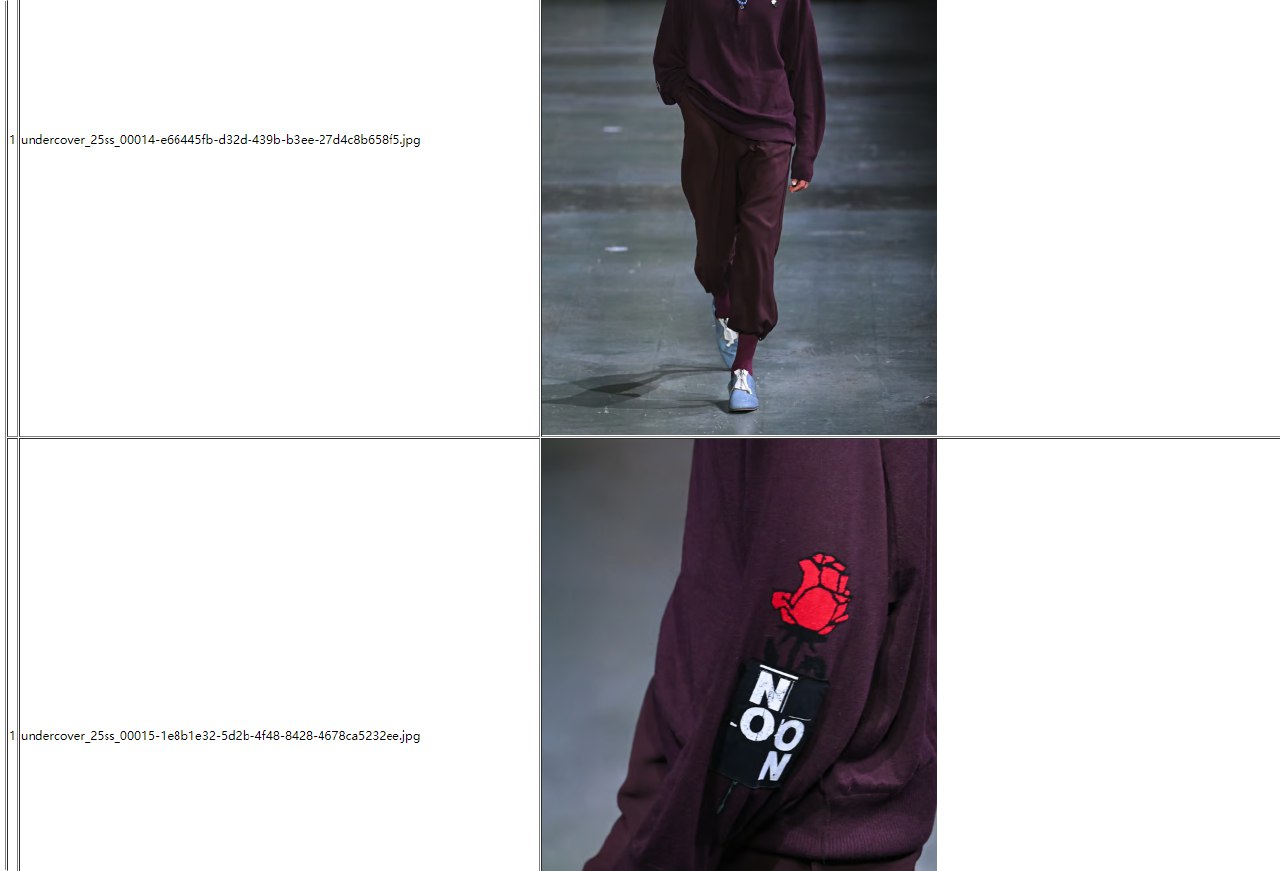

The first bookmarklet lists each image with its size; the second lists the file name, the image, and its alternate text.

javascript:(function(){outText='';for(i=0;i<document.images.length;i++){var image=document.images[i];var maxWidthStyle=%22max-width:700px;%22;var imageTag='<a href=%22'+image.src+'%22 target=%22_blank%22><img style=%22'+(image.tagName.toLowerCase()===%22img%22||image.tagName.toLowerCase()===%22svg%22?maxWidthStyle:%22%22)+%27%22%20src=%22%27+image.src+%27%22%3E%3C/a%3E%27;if(outText.indexOf(image.src)==-1){outText+=%27%3Ctr%3E%3Ctd%20style=%22width:%20700px;%22%3E%3Cdiv%20style=%22max-width:%20700px;%20overflow:%20hidden;%22%3E%27+imageTag+%27%3C/div%3E%3C/td%3E%3Ctd%3E%27+image.naturalWidth+%27x%27+image.naturalHeight+%27%3C/td%3E%3C/tr%3E%3Cp%3E%27;}};if(outText!=%27%27){imgWindow=window.open(%27%27,%27imgWin%27);imgWindow.document.write(%27%3Ctable%20style=margin:auto%20border=1%20cellpadding=10%3E%3Ctr%3E%3Cth%3EImage%3C/th%3E%3Cth%3ESize%3C/th%3E%3C/tr%3E%3Cp%3E%27+outText+%27%3C/table%3E%3Cp%3E%27);imgWindow.document.close();}else{var%20previousAlert=document.getElementById(%27clipboard-alert%27);if(previousAlert){clearTimeout(previousAlert.timeoutId);document.body.removeChild(previousAlert);}var%20tempAlert=document.createElement(%27div%27);tempAlert.id=%27clipboard-alert%27;tempAlert.textContent=%27%E6%B2%A1%E6%9C%89%E5%9B%BE%E7%89%87%EF%BC%81%27;var%20alertStyles={%27min-width%27:%27150px%27,%27margin-left%27:%27-75px%27,%27background-color%27:%27#3B7CF1','color':'white','text-align':'center','border-radius':'4px','padding':'14px','position':'fixed','z-index':'9999999','left':'50%25','top':'30px','font-size':'16px','font-family':'sans-serif'};for(var%20style%20in%20alertStyles){tempAlert.style.setProperty(style,alertStyles[style]);}document.body.appendChild(tempAlert);tempAlert.timeoutId=setTimeout(function(){document.body.removeChild(tempAlert);},1000);}})();
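If the one-liner above is hard to follow, here is a rough un-minified sketch of what it does: walk `document.images`, skip duplicate URLs, and write an Image/Size table into a new window. This is my own illustrative rewrite, not the original author's code. The function names (`collectUniqueImages`, `escapeAttr`, `buildImageTableHtml`) are made up, the attribute escaping is an extra the bookmarklet does not have, and in this sketch the "no images" notice is a plain `alert` shown only when nothing was found.

```javascript
// Readable sketch of the first bookmarklet (all function names are hypothetical).

// Keep the first occurrence of each image URL, preserving page order.
function collectUniqueImages(images) {
  const seen = new Set();
  const unique = [];
  for (const img of images) {
    if (!img.src || seen.has(img.src)) continue; // skip empty and repeated URLs
    seen.add(img.src);
    unique.push({ src: img.src, width: img.naturalWidth, height: img.naturalHeight });
  }
  return unique;
}

// Escape a value for use inside a double-quoted HTML attribute.
// (Assumption: added for safety; the original bookmarklet does not escape.)
function escapeAttr(value) {
  return String(value)
    .replace(/&/g, '&amp;')
    .replace(/"/g, '&quot;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;');
}

// Build the two-column Image / Size table as an HTML string.
function buildImageTableHtml(items) {
  const rows = items.map(({ src, width, height }) => {
    const url = escapeAttr(src);
    return '<tr><td><a href="' + url + '" target="_blank">' +
      '<img style="max-width:700px" src="' + url + '"></a></td>' +
      '<td>' + width + 'x' + height + '</td></tr>';
  });
  return '<table style="margin:auto" border="1" cellpadding="10">' +
    '<tr><th>Image</th><th>Size</th></tr>' + rows.join('') + '</table>';
}

// Browser-only wiring; skipped when no DOM is available (e.g. under Node.js).
if (typeof document !== 'undefined' && typeof window !== 'undefined') {
  const items = collectUniqueImages(Array.from(document.images));
  if (items.length > 0) {
    const win = window.open('', 'imgWin');
    if (win) { // window.open returns null when the popup is blocked
      win.document.write(buildImageTableHtml(items));
      win.document.close();
    }
  } else {
    alert('No images found.');
  }
}
```

You can paste this into the browser's developer console to try it on a page; to use it as a bookmark it would still need to be minified onto one line with a `javascript:` prefix.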

Or:

javascript:(function(){var A={},B=[],D=document,i,e,a,k,y,s,m,u,t,r,j,v,h,q,c,G; G=open().document;G.open();G.close(); function C(t){return G.createElement(t)}function P(p,c){p.appendChild(c)}function T(t){return G.createTextNode(t)}for(i=0;e=D.images[i];++i){a=e.getAttribute('alt');k=escape(e.src)+'%'+(a!=null)+a;if(!A[k]){y=!!a+(a!=null);s=C('span');s.style.color=['red','gray','green'][y];s.style.fontStyle=['italic','italic',''][y];P(s,T(['missing','empty',a][y]));m=e.cloneNode(true); if(G.importNode)m=G.importNode(m, true); if(m.width>350)m.width=350;B.push([0,7,T(e.src.split('/').reverse()[0]),m,s]);A[k]=B.length;}u=B[A[k]-1];u[1]=(T(++u[0]));}t=C('table');t.border=1;r=t.createTHead().insertRow(-1);for(j=0;v=['#','Filename','Image','Alternate%20text'][j];++j){h=C('th');P(h,T(v));P(r,h);}for(i=0;q=B[i];++i){r=t.insertRow(-1);for(j=1;v=q[j];++j){c=r.insertCell(-1);P(c,v);}}%20P(G.body,t);})()
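The second bookmarklet is even more compressed, so here is a hedged sketch of its core idea: group images by URL plus `alt` value, count how often each combination appears, take the last path segment as the file name, and classify the `alt` attribute as missing, empty, or present. The names `altStatus` and `summarizeImages` are hypothetical, and for brevity the sketch prints with `console.table` instead of building a table in a new window as the bookmarklet does.

```javascript
// Readable sketch of the second bookmarklet's grouping logic (hypothetical names).

// Classify an alt attribute: no attribute -> 'missing', alt="" -> 'empty', otherwise 'present'.
function altStatus(alt) {
  if (alt === null || alt === undefined) return 'missing';
  return alt === '' ? 'empty' : 'present';
}

// Group images by (src, alt) and count how many times each pair occurs.
function summarizeImages(images) {
  const byKey = new Map(); // Map keeps insertion order, so rows follow page order
  for (const { src, alt } of images) {
    const normalizedAlt = alt === undefined ? null : alt;
    const key = JSON.stringify([src, normalizedAlt]);
    let entry = byKey.get(key);
    if (!entry) {
      entry = {
        count: 0,
        filename: src.split('/').pop(), // last path segment of the URL
        src: src,
        alt: normalizedAlt,
        status: altStatus(normalizedAlt),
      };
      byKey.set(key, entry);
    }
    entry.count += 1;
  }
  return Array.from(byKey.values());
}

// Browser-only wiring; skipped when no DOM is available (e.g. under Node.js).
if (typeof document !== 'undefined') {
  const rows = summarizeImages(
    Array.from(document.images, (img) => ({ src: img.src, alt: img.getAttribute('alt') }))
  );
  console.table(rows);
}
```

Run it from the developer console; the `status` column corresponds to the red "missing", gray "empty", and green alt-text labels the bookmarklet renders.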